OpenAI Assistant vs RAG Part 1: Significant Limitations and Possibilities

It is too soon to say that OpenAI has killed RAG and API-oriented apps. Given a few more iterations, they may get there. Eventually? Or will they?


“Bigger, smarter, faster, cheaper, easier.” Isn’t that what we all want?

When I read the OpenAI Dev Day announcement, I had two thoughts.

1. This is the dream. My dev work with AI is getting easier and better than ever. If all the promises are true, I'm ready to accept vendor lock-in with OpenAI. I have dreamt about the day when developing RAG gets easier, especially in terms of scale, speed, and performance. I couldn't wait to try the new API with big documents full of tables. Disappointment came quickly once I tried it with a naive approach, but more on that later.

2. I like ChatPDF, no kidding; it was the first app that encouraged me to start developing RAG. Even though I had been working with LLMs, and a bit of RAG, for a while before ChatPDF, seeing a plugin like ChatPDF inside ChatGPT reminded me of the good old days as a mobile developer, spending all day on an app and trying to get it published on the store. For that reason, I knew the day ChatGPT opened its own store would come soon.

But with this announcement, what will be the future of ChatPDF and, more generally, of the entire API-based app market?

Let's start with #2, because I think it is more important than the performance comparison between the OpenAI Assistant and RAG, which we will discuss in depth in another post.

I learnt these hard lessons first-hand while working with start-ups a few years ago, and later found them spelled out in a book: Zero to One by Blake Masters and Peter Thiel.

Is launching a business on top of someone else's product a fast track to quick profits, or to crashing fast?

Let me put it another way.

API could also stand for "Ask the Public for Insights". The company that owns the API already has the data and the infrastructure at hand. All it needs is ideas about what to do with its data, and there is no better way to get those than by asking the public.

If their API is the original tech behind your app, what makes you think they cannot replicate your app overnight?

It has been a long time since "build it and they will come" worked. Startups today without a strategy or a unique business model, and more importantly without a "moat", will likely fade, no kidding.

The term “moat,” popularized by legendary Warren Buffett, refers to a business’s ability to maintain competitive advantages over its competitors in order to protect its long-term profits and market share. Just like a medieval castle, the moat serves to protect those inside the fortress and their riches from outsiders.

And Warren Buffett is my role model when it comes to investment.

Back to our story, let’s start with a quick update from OpenAI Dev Day.

ChatGPT now supports multiple PDFs. Hmm...

Yes, after all the effort developers put into ChatPDF-style plug-ins, OpenAI has now effectively made those apps obsolete. With this upgrade, ChatGPT can read PDFs directly and accepts PDFs, images, and CSVs in the same chat alongside other document types. Users can upload documents and start asking questions right away, which makes the platform more versatile and user-friendly. Previously, users could only upload images in the default mode.

Furthermore, users no longer need to pick which ChatGPT mode they want. You can now use DALL-E 3, Browse, and Advanced Data Analysis (previously known as Code Interpreter) directly in the same chat. GPT decides when it is appropriate to activate each mode, for example when the user asks it to create a picture.

So if you can read CSVs, images, and PDFs and do some quick data analysis, all from one friendly user interface, why pay for a separate plug-in? You probably won't, because the $20/month ChatGPT subscription already covers it.

No wonder this update threatens to wipe out a few dozen startups, including familiar names like ChatPDF, AskYourPDF, and PDF.ai. Those apps were built on the premise that ChatGPT could not work with PDFs directly. Now that it can, what business space is left for these newcomers?

What will happen to AI-oriented “packaged startups”?

AI-oriented packaged startups are businesses that “package” APIs like ChatGPT into their own products, using the underlying chatbot technology to provide a service the original vendor did not provide directly.

Clearly, ChatPDF and similar apps face extinction because of this update. And it is not only ChatPDF: text-to-speech startups may also face disruption.

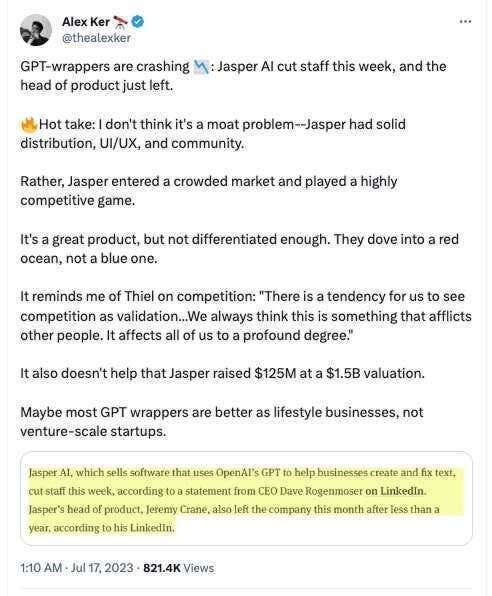

If you want a better example of “OpenAI killed my startup”, look at our beloved Jasper AI.

According to the technology outlet The Information, the company's internal valuation has fallen and its business positioning seems to be in trouble. In July, Jasper AI also announced layoffs.

It didn't help that Jasper had raised $125M at a $1.5B valuation. Maybe the GPT-packaging model isn't right for startups. Maybe it is time for VCs to re-evaluate the startups they have funded, because API-packaging startups that were overfunded on hype and have no moat will soon face extinction.

Again: API could also stand for "Ask the Public for Insights". The company that owns the API already has the data and everything else at hand. It just needs ideas for what to do with its data, and there is no better source of those than the public.

They only need to watch what the public builds and uses to know what to do next. So clever. They control every aspect of the API, so what makes you think your app will still be better once they roll out their own version of it?

You can survive only if your app is more popular, fairly priced, and has a moat that even the company owning the API cannot replicate.

Are there any growth prospects for AI-packaged startups?

AI-packaged apps are here to stay, simply because OpenAI cannot build every feature for every need out there.

Take a RAG-based app like ChatPDF, for example. If it is just an app where you upload multiple PDFs and ask questions, you are doomed: there is no moat in that. However, there is room if your app can do much more, for example:

1. Let users search across all of their documents, not just a single document or a group of documents as in ChatGPT.
2. An enterprise app where employees upload PDF files to shared storage, where they are indexed/embedded and ready for queries.
3. A domain-knowledge chatbot for enterprises, which you could offer as SaaS.
4. Knowledge graphs?

And one thing that ChatGPT sucks at: processing tables.

There is real demand for that, especially in RAG pipelines like #2 and #3. OpenAI will keep improving and may one day tackle the ideas above, but for now, if your moat is excellent table processing that yields more accurate answers, you have already won.

And there is certainly a need for enterprise RAG-based apps like #2 and #3. I don't know of anyone building advanced enterprise RAG-based apps as SaaS, and general-purpose LLMs are not good enough for domain knowledge full of jargon. If you know of one, please reach out to me or leave a comment below.
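To make idea #2 a little more concrete, here is a minimal sketch of an index that embeds every uploaded document and can search across all of them or only a chosen subset. It is a toy built on assumptions: `embed` is a hypothetical stand-in that uses term counts instead of a real embedding model, each paragraph becomes one chunk, and `DocumentIndex`, `add_document`, and `search` are illustrative names, not any library's API.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a real embedding model: lowercase term counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Chunk:
    doc_id: str
    text: str
    vector: Counter


@dataclass
class DocumentIndex:
    chunks: List[Chunk] = field(default_factory=list)

    def add_document(self, doc_id: str, text: str) -> None:
        # Index each non-empty paragraph as its own chunk, tagged with its source document.
        for para in re.split(r"\n\s*\n", text):
            if para.strip():
                self.chunks.append(Chunk(doc_id, para.strip(), embed(para)))

    def search(self, query: str, top_k: int = 3,
               doc_ids: Optional[Iterable[str]] = None) -> List[Tuple[str, str]]:
        # doc_ids=None searches the whole collection; otherwise only the listed documents.
        allowed = None if doc_ids is None else set(doc_ids)
        q = embed(query)
        candidates = [c for c in self.chunks if allowed is None or c.doc_id in allowed]
        ranked = sorted(candidates, key=lambda c: cosine(q, c.vector), reverse=True)
        return [(c.doc_id, c.text) for c in ranked[:top_k]]


index = DocumentIndex()
index.add_document("hr-policy.pdf", "Annual leave is 20 days.\n\nSick leave requires a note.")
index.add_document("it-policy.pdf", "Laptops are replaced every 3 years.")
print(index.search("how many days of annual leave", top_k=1))
# → [('hr-policy.pdf', 'Annual leave is 20 days.')]
```

In a real deployment you would swap `embed` for an actual embedding model and the in-memory list for a vector database; the point of the sketch is only that every document lands in one shared, searchable index instead of a per-chat upload.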

Another example worth looking at is PDF.ai, one of the most profitable, self-sufficient companies in the PDF space. Damon Chen, founder of PDF.ai, says:

“We’re not aiming to be yet another unicorn, a few million dollars in annual recurring revenue is good enough for me.”

The OpenAI update has had a significant impact on PDF.ai. But if you have made a few million dollars and are now disrupted by OpenAI, that's not a problem: cash out and move on to a new project.

With that in mind, as long as GPT exists, GPT-packaging solutions will keep growing and thriving, because for most people, making money from a package is still more attractive than building something sustainable with a “moat”.

Surprisingly, despite many headlines with “OpenAI just killed my startup”, many remain optimistic. Sahar Mor, head of product at payments provider Stripe, says: “There is still a real value in building user-friendly interfaces and easier-to-use features, so vertical startups targeting specific segments will continue to dominate. The real risk is mainly for AI startups that extend horizontally”

What I take from this is that small players have little chance of entering the market to compete with OpenAI or Anthropic head-on. If you aim to offer yet another closed-source LLM to compete with them, you need a lot, a lot, a lot of cash in hand and really good relationships with big enterprises so they will start using your product, just as they did with AWS or Azure. Meta (formerly Facebook) knows this. They know it is hard to compete with established names in the market, but they don't want those names to rule the game, so what they did was brilliant: they open-sourced their model, a project that took a few years, drew on the collective wisdom of some of the brightest minds on earth, and cost them billions. Why? Because that is how they compete: by watering down the first-mover advantage.

Will AI Assistant kill LLM frameworks such as LlamaIndex and Langchain?

There is a long answer to this, but I know you want the short one: a BIG NO. At least for now.

Someone tweeted the categories of startups that OpenAI could potentially kill.

About #1: I can't comment on this yet. Each OpenAI competitor has its own unique strengths, so users will decide who wins.

I quickly tried the AI Assistant and compared it to the RAG pipeline I developed, and boy, I don't have to tell you how disappointed I am. I shouldn't have expected too much when I saw the AI Assistant at Dev Day and dreamt of the day when developing RAG would become super easy and I could spend more time on the “full-pipeline” aspect. Plenty of people, myself included, have already done quick analyses and comparisons, and I think this topic deserves a separate post.

There are a few reasons I believe the AI Assistant will need many more iterations and releases before it can match a complex RAG pipeline:

1. Give users more fine-grained control. At the time of writing, you use the AI Assistant API as a black box: you cannot control important aspects such as the embedding model (or fine-tuning it), chunking, reranking, the number of top-n relevant chunks retrieved, etc.

2. AGAIN, it needs a better approach to processing tables and answering questions about them correctly.
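For comparison, here is roughly what those knobs look like when you own the pipeline. This is a minimal, self-contained sketch, not LlamaIndex's or OpenAI's API: `chunk_words`, `first_stage`, `rerank`, and `retrieve` are hypothetical names, the first stage scores chunks by shared terms, and the reranker scores shared bigrams as a cheap stand-in for a real cross-encoder reranker. The point is that `chunk_size`, `overlap`, `top_n`, and `top_k` are all yours to tune.

```python
import re
from typing import List


def tokens(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    # Sliding word windows; consecutive chunks share `overlap` words so context isn't cut off.
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break
    return chunks


def first_stage(query: str, chunks: List[str], top_n: int) -> List[str]:
    # Recall-oriented pass: keep the top_n chunks sharing the most query terms.
    q = set(tokens(query))
    return sorted(chunks, key=lambda c: len(q & set(tokens(c))), reverse=True)[:top_n]


def bigrams(text: str) -> set:
    toks = tokens(text)
    return set(zip(toks, toks[1:]))


def rerank(query: str, candidates: List[str], top_k: int) -> List[str]:
    # Precision-oriented pass: reorder by shared bigrams (stand-in for a cross-encoder).
    qb = bigrams(query)
    return sorted(candidates, key=lambda c: len(qb & bigrams(c)), reverse=True)[:top_k]


def retrieve(query: str, text: str, chunk_size: int = 50, overlap: int = 10,
             top_n: int = 5, top_k: int = 2) -> List[str]:
    chunks = chunk_words(text, chunk_size, overlap)
    return rerank(query, first_stage(query, chunks, top_n), top_k)


doc = "net revenue fell in q3 while costs rose and headcount stayed flat"
print(retrieve("net revenue fell", doc, chunk_size=4, overlap=0, top_n=3, top_k=1))
# → ['net revenue fell in']
```

The two-stage split mirrors the usual design choice: a cheap, generous first pass for recall, then a more expensive scorer applied only to the `top_n` survivors.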

Here are some quick comparisons so you can see for yourself.

Why do I talk so much about table processing, especially PDFs full of tables? Because handling PDFs with unstructured tables is the holy grail for most of my RAG applications.

Until now, table processing has been a trade-off between speed and accuracy. UnstructuredIO has been very good, but if you need to extract all the tables from a PDF with it, the processing time is a real pain.
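One common workaround once tables have been extracted (by UnstructuredIO or any other parser; that extraction step is assumed and not shown) is to serialize each row into a self-describing text chunk that repeats the column headers, so a cell like `12.4` is never embedded without its context. A minimal sketch with a hypothetical `serialize_table` helper and made-up example values:

```python
from typing import List, Sequence


def serialize_table(title: str, header: Sequence[str],
                    rows: Sequence[Sequence[str]]) -> List[str]:
    # One chunk per row; each cell is paired with its column header and the table title.
    chunks = []
    for i, row in enumerate(rows):
        if len(row) != len(header):
            raise ValueError(f"row {i} has {len(row)} cells but the header has {len(header)}")
        cells = "; ".join(f"{col}: {val}" for col, val in zip(header, row))
        chunks.append(f"{title} | {cells}")
    return chunks


for chunk in serialize_table(
    "Quarterly revenue (USD m)",
    ["Quarter", "Region", "Revenue"],
    [["Q1", "EMEA", "12.4"], ["Q1", "APAC", "9.1"]],
):
    print(chunk)
# Quarterly revenue (USD m) | Quarter: Q1; Region: EMEA; Revenue: 12.4
# Quarterly revenue (USD m) | Quarter: Q1; Region: APAC; Revenue: 9.1
```

Row-level chunks trade index size for precision: a question about a single cell can retrieve the right row, while questions spanning the whole table may still need the full table indexed as one chunk as well.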

I have written a post about the challenges of developing RAG with tables here:

RAG Pipeline Pitfalls: The Untold Challenges of Embedding Table


LlamaIndex has already integrated the OpenAI Assistant into its framework, which shows how fast the team behind it moves.

That means you can combine your own complex RAG pipeline with the OpenAI Assistant through LlamaIndex, and you can also use your own vector database with the OpenAI Assistant via LlamaIndex. At the time of writing, LlamaIndex has also introduced multi-modal support for processing images. The possibilities are exciting, and I can't wait to see what the coming year brings.
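A minimal sketch of the glue for that kind of combination, assuming your own pipeline (LlamaIndex or otherwise) has already returned ranked `(source, text)` chunks: the hypothetical `build_grounded_message` packs them, numbered for citation, into a single message under a character budget, which you would then send to the Assistant. The actual API call is deliberately left out, since its shape depends on the SDK version you use.

```python
from typing import List, Tuple


def build_grounded_message(question: str, chunks: List[Tuple[str, str]],
                           max_chars: int = 2000) -> str:
    # Chunks are assumed to be ranked best-first; stop once the next one would exceed the budget.
    parts = []
    used = 0
    for i, (source, text) in enumerate(chunks, start=1):
        entry = f"[{i}] ({source}) {text}"
        if used + len(entry) > max_chars:
            break
        parts.append(entry)
        used += len(entry)
    context = "\n".join(parts)
    return (
        "Answer using only the numbered context below and cite the numbers you rely on.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


message = build_grounded_message(
    "How many days of annual leave do employees get?",
    [("hr-policy.pdf", "Annual leave is 20 days."),
     ("it-policy.pdf", "Laptops are replaced every 3 years.")],
    max_chars=60,
)
print(message)
```

Keeping retrieval on your side means chunking, reranking, and table handling stay under your control, while the Assistant only sees the context you chose.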

Summary

Horizontal AI apps without massive cash in hand to acquire customers won't be able to compete with OpenAI or Anthropic.

Vertical AI apps will survive and thrive. There is a need in niche areas that general LLMs cannot tap yet.

There is a need for RAG-based chatbots as enterprise SaaS.

Open source is here to stay, and it is our best chance to avoid vendor lock-in. Competition is always good, and it pushes this space forward.

The OpenAI Assistant doesn't quite match the sophistication of a complex RAG pipeline. Rather than choosing one over the other, it is often beneficial to combine them.

Happy first birthday, LlamaIndex! It is fascinating to see how much the entire LLM space has progressed in a year. Thank you all.

❤ If you found this post helpful, I'd greatly appreciate your support by giving it a clap. It means a lot to me and demonstrates the value of my work. You can also subscribe to my Substack, where I will cover LLM development in more depth.

Want to Connect?

If you need to reach out, don't hesitate to drop me a message via my Twitter or LinkedIn, and subscribe to my Substack, as I will cover more learning practices, especially the path of developing LLMs, in depth in my Substack channel.